Review of the Arts and AI Panel Discussion by Clone Wen (cw4295@nyu.edu)

On November 9th, 2023, the Center for Comparative Media (CCM) organized the Arts and AI panel “Arts and AI: Past, Present, and Future,” featuring Antonio Somaini (Paris 3 Sorbonne Nouvelle) and Kate Crawford (USC Annenberg), moderated by Noam M. Elcott at Columbia University. From the birth of the first AI chatbot in the 1960s to today’s disputes about GPT4 and LAION-5B, the doors are closing throughout the AI generative networks industry, with little or no information about what its trained on. The panel discussed the current development of AI and the arts through latent space visualizations, invisibility and inaccessibility in latent spaces, and the inhuman epistemic anxiety in the created invisibilities. The panel discussed how the arts and AI got to this place with massively complex systems.

Latent Space Visualizations on Generative Networks

Antonio Somaini begins with the possibility of reconstructing a history cutting across the 20th century through AI visualization of all these attempts to enhance, de-center, and replace human vision for technology. “In the AI visual culture, we need to unlearn how to see like humans.” Machine vision systems—those first generative algorithms—were profoundly transforming not only the ways in which images are seen but also the ways in which images are generated and loaded.

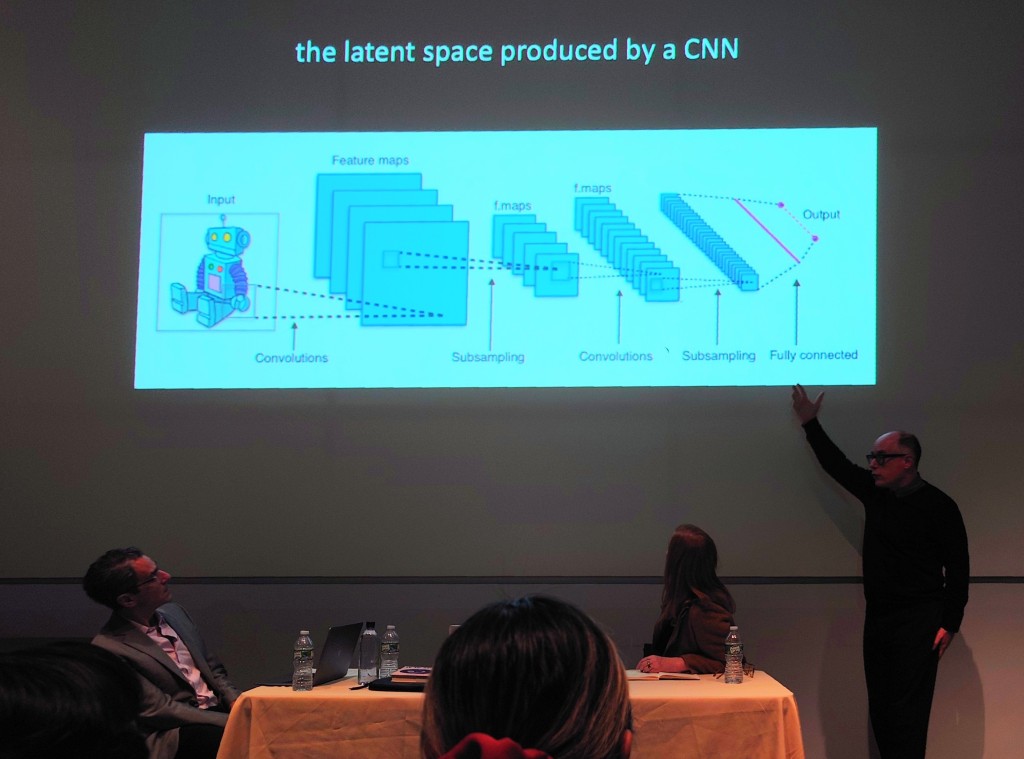

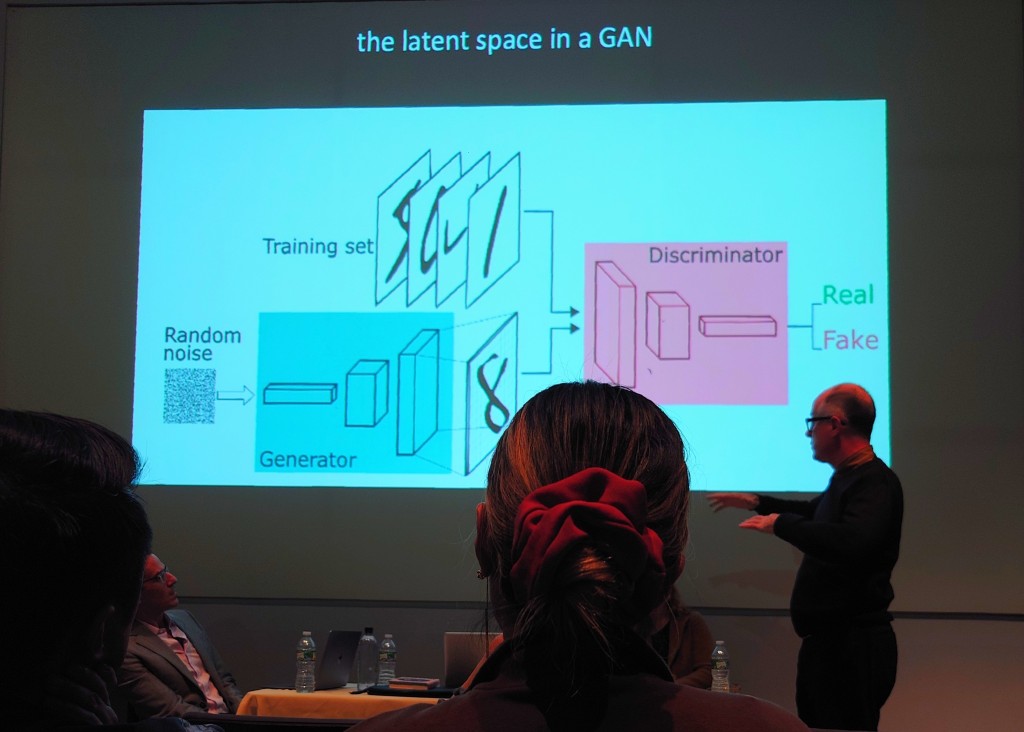

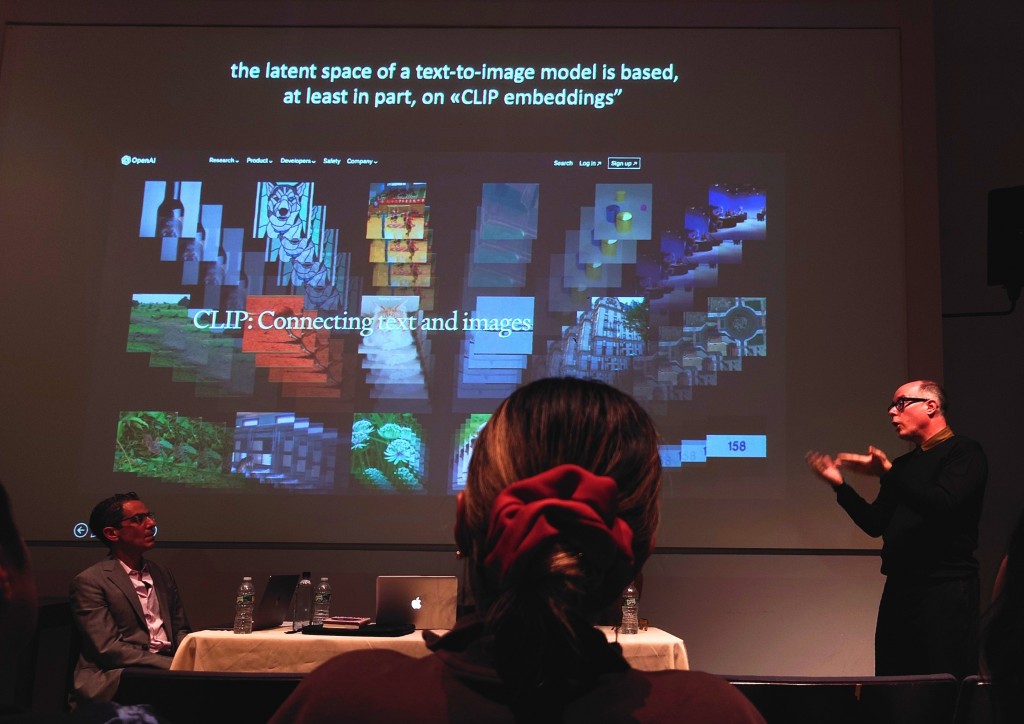

Tracing the history of art and AI, there are three moments of developments, and all of them are coexisting at this point. The first one is Convolutional Neural Networks(CNN), used mostly for machine vision technologies. The second phase comes with the Generative Adversarial Networks (GAN), which began in 2016 to generate images. And the last phase is the text-to-image models released in 2022, which is now being developed into text-to-video models.

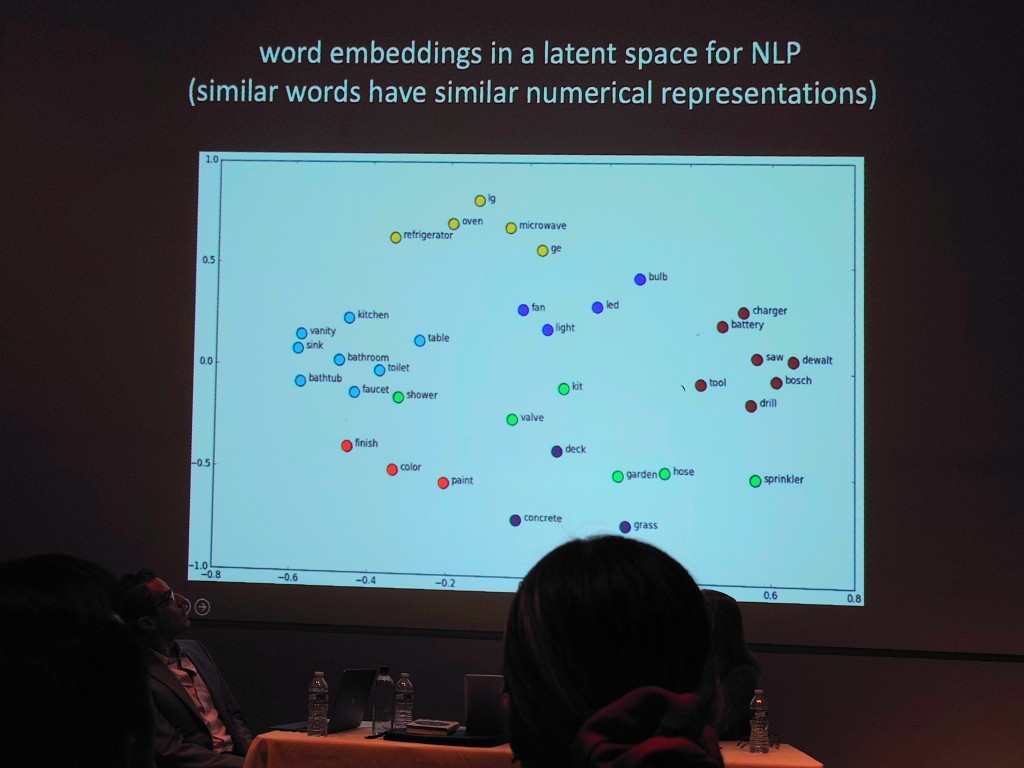

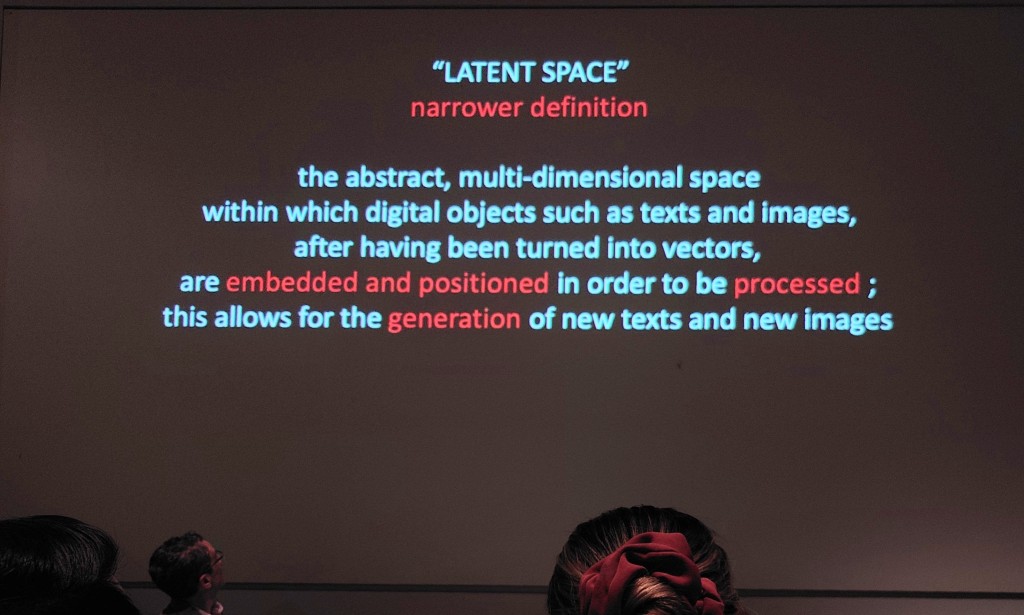

Moving images are often combined with other forms of image-to-image translation or even image-to-text translation, starting from an image and generated in the script. Now each one of these three big categories of deep learning algorithms is positioned in relation to distance and proximity to one another. For a large language model, in each and every dimension of latent space, words that are used more often in connection to one another are in a closer region of their latent space, whereas on the contrary, words that are distant from one another are also more distant in latent space. A possible visualization of the latent space used for natural language processing is a space that can have hundreds of dimensions. In fact, for stable diffusion, there are 768 dimensions.

The convolutional neural networks (CNN) start to analyze an image in its original dimension and then learn to analyze features that are more and more reduced. As convolutional neural networks (CNN) get to this last layer, which is the latent space, in which the essential features of an image have been abstracted so that the model can pronounce its output and say whether the image is. Then, the generative adversarial networks (GAN) come to find it between the random noises in the latent space. At that point, a latent vector is given to this model, which starts producing images that it then submits to a discriminator that has been trained with a training set to decide whether the images that are submitted are or are not part of the training set.

Billions of images are connected to various kinds of texts. In producing all the AI images, the generator learns how to explore the mating space it’s been given and produces different kinds of images. When the generative models could produce their own latent space through training, they created their own latent space visualizations.

Creating Invisibilities in Latent Space

The multi-dimensional latent space is not transparent. The argument lies in certain banned prompts in generative art, like words related to porn, violence, or discrimination, which are learned to be banned, creating areas of invisibility in latent space. Culture is more and more turned into censorship and political vectors, processed, and then generated after other inaccessible cultural conflicts. So cultural transmission is strongly influenced not only by the presence of these latent spaces but also by all the forbidden prompts and images that condition regeneration.

Antonio Somaini mentioned an iteration of forbidden images in a way using a big term, a new form of iconoclasts in a way. He started with the example that if you write fascist with the spelling mistake without the S, or “men with no clothes on the streets,” still no-so-related images appear. So, there is a pre-prompt or hidden prompt that somehow corrects the haunting by the users to make sure that certain images are not generated. The secret addition of words, what’s called pre-prompt injection, has led to several critical projects on ImageNet, Google, and OpenAI.

Inhuman Epistemic Anxiety in Created Invisibilities

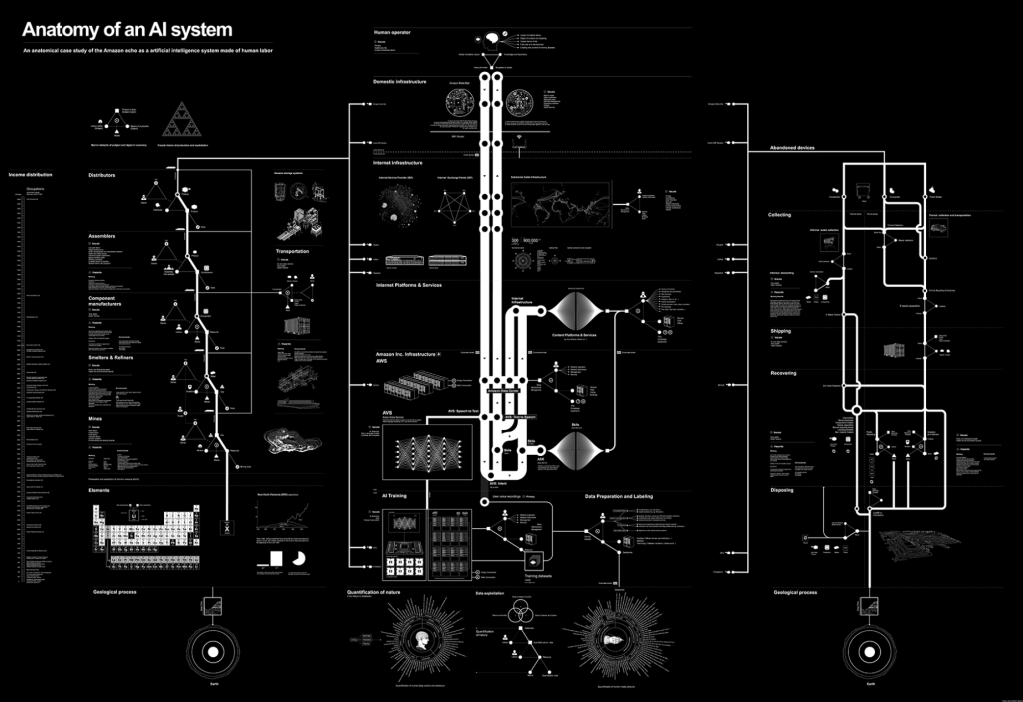

Art and art history have long been open to non-human images, and many images are made for divine vision but not human vision, with no intention for a human audience. Kate Crawford introduces the idea of enchanted determinism and dates the phenomenon of perceiving artificial intelligence as being divine, alien, godlike, and deterministic to the 1960s, when Joseph Weizenbaum at MIT developed the first chatbot. But even the data set of billions of images, Kate argued, like LAION-5B (an open large-scale dataset for training next-generation image-text models), is far from being the entire internet.

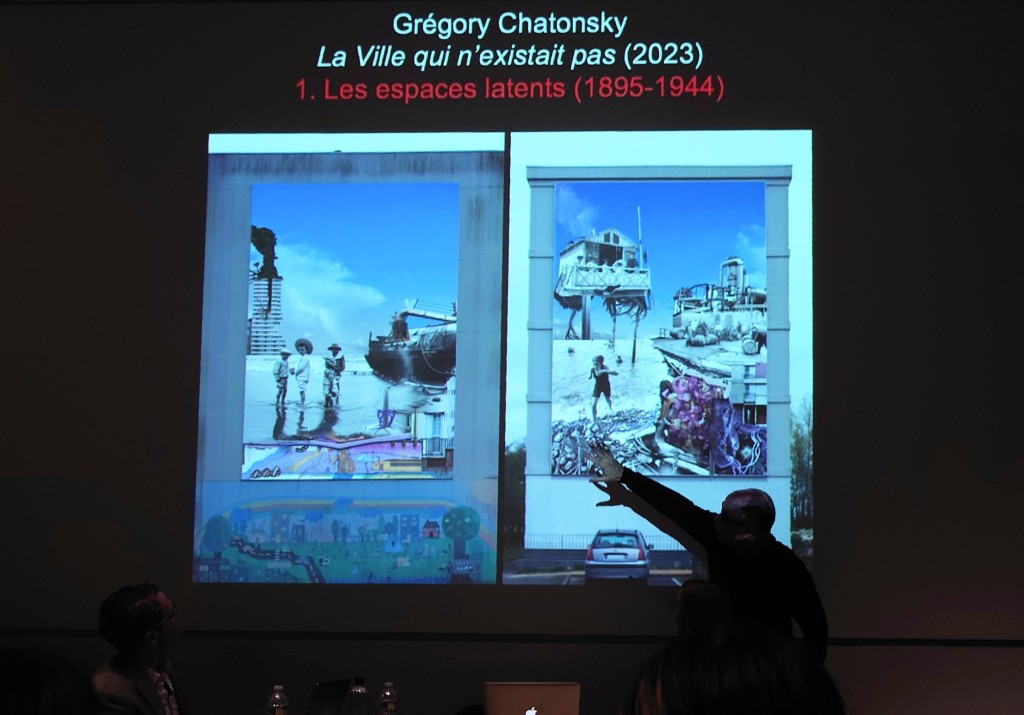

Kate Crawford pointed out another interesting fact: LAION-5B doesn’t have images in it. It only has links to images. In this way, she introduces the idea of poisoning datasets, as every link in LAION-5B could have fundamentally changed the underlying representational reality. Antonio Somaini gave an example of the poisoning datasets effect in La Ville qui n’existait pas (2023), in which the visualization of a fake image about a nonexciting city could be a counterfactual to the visualization of the history of art.

The repeated iterations replace a poisoning dataset are witnessing the formation of giant feedback loops of AI-generating AI, which will further solidify the poisoned areas of invisibility. AI-generated texts and images would make up the majority of an imaging future for “LAION-50B” or “LAION-500B”. Antonio Somaini called this “A Generative Ecstasy.” An ecstasy that does not describe pre-existing images or does not try to kind of trigger the imagination of a reader, but a description, an ecstasy that pre-describes an image in order to then generate it. For prevention, the major corporations are now thinking about ways of watermarking images so that they won’t enter the AI training sets.

Nowadays, not only visual media but also music are being reduced more and more to a vast set of terms to better describe their techniques and styles for AI-generated text-to-sound models. AI is learning human sights and sounds and exploring more senses in a text-to-human way. Making AI more and more human-like and gaining access to human senses is no longer the ultimate destination for AI models. People’s enchanted determinism of AI technology has helped drive the feeling of the sublime in AI-related arts, allowing AI to be continually molded from inhuman to superhuman by artists. Nonetheless, a generative ecstasy has not yet to come. Amidst the epistemic anxiety of constant iteration and discourse, we have already entered the era of AI and art collaborations.